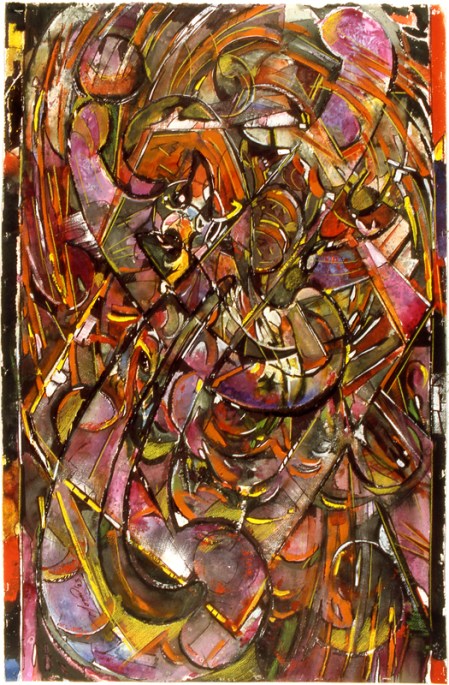

Ricardo Morín

Bulwark

Formerly titled Buffalo Series, Nº 3

Oil on linen, 60 × 88 in.

1980

Exhibited: Hallwalls Contemporary Arts Center, Buffalo, New York, May 1980

Destroyed while in third-party custody; extant as digital archival record only.

*

Ricardo F. Morin

December 23, 2025

Kissimmee, FL

*

I encountered the boundaries that would later govern my writing not through instruction or doctrine, but through a remark made in passing by my father when I was still a child. He stated, without hesitation or elaboration, that he could not imagine existing under a political system that threatened individual liberty and private autonomy, and that life under such conditions would no longer be a life he could inhabit. The formulation was extreme, yet even then it was clear that it was not intended as a proposal, a threat, or a performance. It functioned instead as a boundary: an indication of where survival, once stripped of dignity, would no longer merit the name of living.

The force of that remark did not reside in its literal content, but in the clarity with which it established a limit. Extreme statements often draw attention by excess, but this one operated differently. It did not seek reaction or allegiance. It closed a door. What it marked was the point at which judgment ceased to be negotiable, not because compromise becomes difficult, but because continuation itself loses coherence. It was not expression but diagnosis. It identified a threshold beyond which endurance would amount to acquiescence in one’s own negation.

That distinction—between living and merely persisting—would take years to acquire its full weight. One can remain alive and yet no longer inhabit the conditions under which action, responsibility, and choice remain intelligible. The body endures; the terms of authorship do not. What is surrendered in such cases is not comfort or advantage, but authorship over one’s own conduct: the capacity to remain the source and bearer of one’s actions.

Only later did historical irony give that childhood memory a broader frame. My father died one year before Venezuela entered a prolonged political order that normalized civic humiliation and displaced individual responsibility. This coincidence does not confer foresight or vindication. It merely underscores the nature of the limit he articulated. He did not claim to predict outcomes or to possess superior insight. He identified a condition he would not inhabit, regardless of how common, administratively justified, or socially enforced it might become.

What was transmitted through that remark was not an ideology, nor even a political position, but a refusal. It was a refusal to treat dignity as contingent, and a refusal to accept adaptation as inherently neutral. Such refusals are not dramatic. They do not announce themselves as virtues. They operate quietly, shaping what one will not do, what one will not say, and what one will not permit to pass through one’s actions in exchange for continuity, safety, or approval.

Writing, I have come to understand, is not exempt from the constraints that govern action. Symbolic form does not suspend responsibility. Language acts. It frames possibilities, distributes responsibility, and licenses certain responses while it forecloses others. To write without regard for what one’s words enable is to treat expression and conduct as if they belonged to different orders. They do not. The same boundary that governs action governs language: one must not inhabit forms that require the habitual abandonment of autonomy.

Authorial responsibility does not entail moral exhibition or the performance of virtue. Responsibility in writing does not consist in adopting the correct posture or aligning with approved conclusions. It consists in refusing methods that rely on coercion, humiliation, or rhetorical pressure in place of clarity. It requires attention not only to what is asserted, but to what is permitted to continue through tone, implication, and omission. Precision here is not a stylistic preference; it is a moral discipline.

Restraint, in this sense, is not passivity but a method of authorship. It is a form of interruption in the circulation of what one does not consent to carry forward. To decline to amplify it is an act of selection and an exercise of agency. In an environment where excess, outrage, and reactive urgency are often mistaken for seriousness, restraint becomes a way of maintaining authorship over one’s participation. Restraint limits reach, but it preserves coherence between what is said and what is lived.

Such restraint inevitably carries a cost. Urgency is more than speed; it is the condition under which reflection itself begins to appear as a liability. Reflection serves as a procedural safeguard of agency and authorship, and with them of ethical responsibility, even when circumstances cannot be governed and one is compelled to choose within constraint. Restraint resists urgency, narrows reach, and forgoes certain forms of recognition. These losses are not incidental; they are constitutive. To accept all available registers or platforms in the name of relevance is to treat survival as the highest good. The boundary articulated long ago indicates otherwise: that there are conditions under which continuation exacts a price too high to pay.

Authorial responsibility, then, is not a matter of expression but of alignment between language and action. It asks whether one’s language inhabits the same ethical terrain as one’s conduct. It asks whether the forms one adopts require compromises one would refuse in action. The obligation is not to persuade or to prevail, but to remain answerable to the limits one has acknowledged.

What remains is not a doctrine but a stance: a way of standing without dramatization, without escape, and without concession to forms that promise endurance at the expense of dignity. Such a posture does not announce itself as resistance, nor does it seek exemption from consequence. It holds its ground without appeal. In doing so, it affirms that authorship, like autonomy, begins where certain lines are no longer crossed.

*

What remains unaddressed is the more fragile condition beneath authorship itself: the way thinking precedes command, and at times repositions the author before any stance can be assumed.

The memory of my father appeared as a moving target: not an idea slipping out of control, but a standard shifting under my feet while I was still advancing. I did not invite it in the sense of intention or plan. Nor did I resist it. I noticed it moving before I could decide what it demanded.

That experience is unsettling because it violates a comforting assumption: that thought is something we deploy, rather than something that displaces us.

The uncertainty about whether I had invited it is itself a sign that I was not instrumentalizing my thinking. When thought is summoned as a tool, it remains fixed. When it emerges in response to something that matters, it moves, because it is adjusting to reality rather than arranging it. That movement feels like a loss of control only if authorship is understood as command.

I allowed the discomfort of not knowing whether I had summoned what was now demanding attention. This was resistance under motion, not paralysis of judgment. The question arises only when thinking is still alive enough to be displaced.

The target moved because it was attached to the terrain of perception, not to the self doing the perceiving.